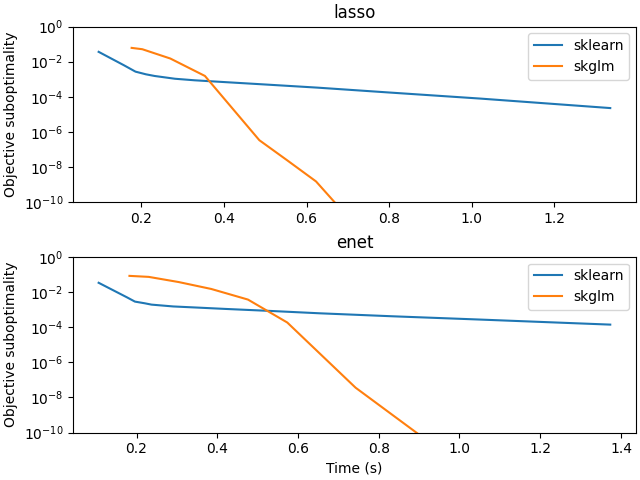

Timing comparison with scikit-learn for Lasso

Compare the time skglm and scikit-learn take to solve large-scale Lasso and elastic net problems on the news20.binary dataset.
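For reference, the quantity that `compute_obj` evaluates in the script below is the elastic net objective, written here with $n$ = `len(y)` and $\rho$ = `l1_ratio` (symbols chosen for this note; $\rho = 1$ gives the Lasso):

$$
P(w) = \frac{1}{2n}\lVert y - Xw\rVert_2^2 + \alpha\rho\lVert w\rVert_1 + \frac{\alpha(1-\rho)}{2}\lVert w\rVert_2^2
$$

The script sets $\alpha = \lVert X^\top y\rVert_\infty / (10n)$, that is, one tenth of the smallest $\alpha$ for which the Lasso solution without intercept is identically zero. The plotted "objective suboptimality" is $P(w) - P(w^\star)$, where $w^\star$ is the skglm solution obtained with `max_iter = 10_000`.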

import time
import warnings
import numpy as np
from numpy.linalg import norm
import matplotlib.pyplot as plt
from libsvmdata import fetch_libsvm
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import Lasso as Lasso_sklearn
from sklearn.linear_model import ElasticNet as Enet_sklearn

from skglm import Lasso, ElasticNet

warnings.filterwarnings('ignore', category=ConvergenceWarning)

def compute_obj(X, y, w, alpha, l1_ratio=1):
    loss = norm(y - X @ w) ** 2 / (2 * len(y))
    penalty = (alpha * l1_ratio * np.sum(np.abs(w))
               + 0.5 * alpha * (1 - l1_ratio) * norm(w) ** 2)
    return loss + penalty

X, y = fetch_libsvm("news20.binary")
alpha = np.max(np.abs(X.T @ y)) / len(y) / 10

dict_sklearn = {}
dict_sklearn["lasso"] = Lasso_sklearn(
    alpha=alpha, fit_intercept=False, tol=1e-12)
dict_sklearn["enet"] = Enet_sklearn(
    alpha=alpha, fit_intercept=False, tol=1e-12, l1_ratio=0.5)

dict_ours = {}
dict_ours["lasso"] = Lasso(
    alpha=alpha, fit_intercept=False, tol=1e-12)
dict_ours["enet"] = ElasticNet(
    alpha=alpha, fit_intercept=False, tol=1e-12, l1_ratio=0.5)

models = ["lasso", "enet"]

fig, axarr = plt.subplots(2, 1, constrained_layout=True)

for ax, model, l1_ratio in zip(axarr, models, [1, 0.5]):
    pobj_dict = {}
    pobj_dict["sklearn"] = list()
    pobj_dict["us"] = list()

    time_dict = {}
    time_dict["sklearn"] = list()
    time_dict["us"] = list()

    # Remove compilation time
    dict_ours[model].max_iter = 10_000
    w_star = dict_ours[model].fit(X, y).coef_
    pobj_star = compute_obj(X, y, w_star, alpha, l1_ratio)

    for n_iter_sklearn in np.unique(np.geomspace(1, 50, num=15).astype(int)):
        dict_sklearn[model].max_iter = n_iter_sklearn

        t_start = time.time()
        w_sklearn = dict_sklearn[model].fit(X, y).coef_
        time_dict["sklearn"].append(time.time() - t_start)
        pobj_dict["sklearn"].append(compute_obj(X, y, w_sklearn, alpha, l1_ratio))

    for n_iter_us in range(1, 10):
        dict_ours[model].max_iter = n_iter_us

        t_start = time.time()
        w = dict_ours[model].fit(X, y).coef_
        time_dict["us"].append(time.time() - t_start)
        pobj_dict["us"].append(compute_obj(X, y, w, alpha, l1_ratio))

    ax.semilogy(
        time_dict["sklearn"], pobj_dict["sklearn"] - pobj_star, label='sklearn')
    ax.semilogy(
        time_dict["us"], pobj_dict["us"] - pobj_star, label='skglm')

    ax.set_ylim((1e-10, 1))
    ax.set_title(model)
    ax.legend()
    ax.set_ylabel("Objective suboptimality")

axarr[1].set_xlabel("Time (s)")
plt.show(block=False)

Total running time of the script: (1 minutes 3.034 seconds)
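The measurement pattern used above (fit with an increasing iteration budget, record wall-clock time, and report the objective gap to a reference solution) can be tried without downloading news20.binary. The sketch below is only an illustration under stated assumptions: it uses small synthetic dense data, and `ista_lasso` is a hypothetical stand-in solver (plain proximal gradient), not the algorithm used by skglm or scikit-learn.

```python
import time

import numpy as np


def compute_obj(X, y, w, alpha, l1_ratio=1.0):
    """Elastic net objective, same formula as in the script above."""
    n = len(y)
    loss = np.sum((y - X @ w) ** 2) / (2 * n)
    penalty = (alpha * l1_ratio * np.sum(np.abs(w))
               + 0.5 * alpha * (1 - l1_ratio) * np.sum(w ** 2))
    return loss + penalty


def ista_lasso(X, y, alpha, max_iter):
    """Hypothetical stand-in solver: proximal gradient (ISTA) for the Lasso."""
    n, p = X.shape
    # Step size 1 / L, where L = ||X||_2^2 / n is the Lipschitz constant
    # of the gradient of the quadratic loss.
    step = n / np.linalg.norm(X, ord=2) ** 2
    w = np.zeros(p)
    for _ in range(max_iter):
        grad = X.T @ (X @ w - y) / n
        z = w - step * grad
        # Soft-thresholding: proximal operator of step * alpha * ||.||_1
        w = np.sign(z) * np.maximum(np.abs(z) - step * alpha, 0.0)
    return w


# Synthetic data (assumption: stands in for the real dataset)
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 100))
y = rng.standard_normal(50)
alpha = np.max(np.abs(X.T @ y)) / len(y) / 10

# Reference objective from a long run, playing the role of pobj_star
pobj_star = compute_obj(X, y, ista_lasso(X, y, alpha, 5000), alpha)

times, subopt = [], []
for max_iter in [1, 10, 100]:
    t_start = time.perf_counter()
    w = ista_lasso(X, y, alpha, max_iter)
    times.append(time.perf_counter() - t_start)
    subopt.append(compute_obj(X, y, w, alpha) - pobj_star)
```

Plotting `subopt` against `times` on a log scale gives the same kind of curve as in the figure. Refitting from scratch for each budget, as done here and in the script above, keeps each timing independent of the previous run.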