
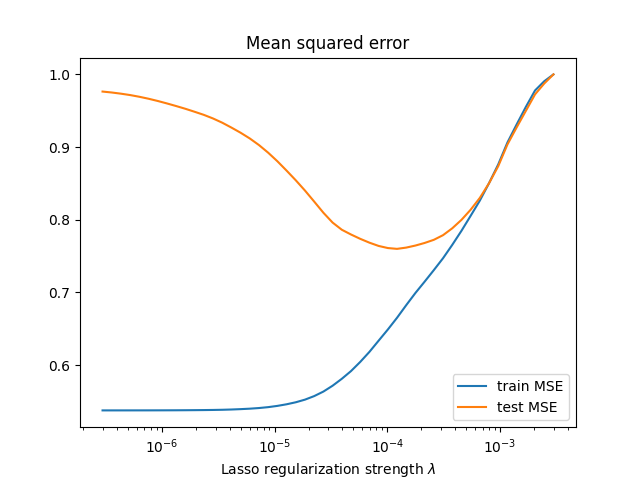

Show U-curve of regularization

Illustrate the sweet spot of regularization: not too much, not too little.

We showcase this for the Lasso estimator on the rcv1.binary dataset.

```python
import numpy as np
from numpy.linalg import norm
import matplotlib.pyplot as plt
from libsvmdata import fetch_libsvm

from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

from skglm import Lasso
```

First, we load the dataset and keep only its first 2000 features. We also keep only 2000 samples in the training set.

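```python
# Fetch rcv1.binary and keep only its first 2000 features
X, y = fetch_libsvm("rcv1.binary")
X = X[:, :2000]

# Random train/test split, then keep only 2000 training samples
X_train, X_test, y_train, y_test = train_test_split(X, y)
X_train, y_train = X_train[:2000], y_train[:2000]
```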


Next, we define the regularization path.

For the Lasso, it is well known that there is a value alpha_max above which the optimal solution is the zero vector.
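One way to see where this threshold comes from is to assume the Lasso objective uses the scikit-learn-style scaling $\frac{1}{2n}\|y - Xw\|_2^2 + \alpha\|w\|_1$, with $n$ samples, $p$ features, columns $x_j$ of $X$, and no intercept. That scaling is consistent with the division by `len(y_train)` in the code. By Fermat's rule, $w = 0$ is a minimizer if and only if $0$ belongs to the subdifferential of the objective at $0$:

```latex
\begin{aligned}
0 \in \partial\Big(\tfrac{1}{2n}\|y - Xw\|_2^2 + \alpha\|w\|_1\Big)\Big|_{w=0}
  &\iff 0 \in -\tfrac{1}{n} X^\top y + \alpha\,[-1, 1]^p \\
  &\iff \big|x_j^\top y\big| \le n\alpha \quad \text{for all } j = 1, \dots, p \\
  &\iff \alpha \ge \alpha_{\max} := \frac{\|X^\top y\|_\infty}{n}.
\end{aligned}
```

This is the quantity computed as `norm(X_train.T @ y_train, ord=np.inf) / len(y_train)`.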

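```python
# Smallest alpha for which the Lasso solution is exactly zero
alpha_max = norm(X_train.T @ y_train, ord=np.inf) / len(y_train)

# 50 values (np.geomspace default) from alpha_max down to alpha_max * 1e-4
alphas = alpha_max * np.geomspace(1, 1e-4)
```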
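To make the grid concrete, here is a NumPy-only sketch on small synthetic dense data. The random `X` and `y` are hypothetical stand-ins for `X_train` and `y_train`. It recomputes alpha_max the same way and builds the same grid. Since `np.geomspace` returns 50 points by default, the path has 50 values evenly spaced on a log scale, so consecutive values differ by a constant ratio of $(10^{-4})^{1/49}$.

```python
import numpy as np
from numpy.linalg import norm

# Hypothetical synthetic data standing in for X_train, y_train
rng = np.random.default_rng(0)
n_samples, n_features = 100, 20
X = rng.standard_normal((n_samples, n_features))
y = rng.standard_normal(n_samples)

# Smallest alpha for which w = 0 solves the Lasso (1/(2n) quadratic datafit)
alpha_max = norm(X.T @ y, ord=np.inf) / n_samples

# Same grid as in the example: 50 values decreasing geometrically
# from alpha_max to alpha_max * 1e-4
alphas = alpha_max * np.geomspace(1, 1e-4)

# Ratio between consecutive grid points is constant
ratios = alphas[1:] / alphas[:-1]
print(len(alphas), np.isclose(alphas[0], alpha_max), np.allclose(ratios, ratios[0]))
```

Starting at alpha_max means the first fit of the path returns the zero vector. The path then moves toward weaker regularization.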

Let’s train the estimator along the regularization path and then compute the MSE on train and test data.

```python
mse_train = []
mse_test = []

# warm_start=True: each fit starts from the solution at the previous alpha
clf = Lasso(fit_intercept=False, tol=1e-8, warm_start=True)

for alpha in alphas:
    clf.alpha = alpha
    clf.fit(X_train, y_train)
    mse_train.append(mean_squared_error(y_train, clf.predict(X_train)))
    mse_test.append(mean_squared_error(y_test, clf.predict(X_test)))
```
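The following sketch shows what a warm-started path does, using NumPy only. It swaps in plain cyclic coordinate descent with soft-thresholding for illustration. This is not skglm's solver, which is more elaborate. The sketch assumes the objective $\frac{1}{2n}\|y - Xw\|_2^2 + \alpha\|w\|_1$ with no intercept. The synthetic data and the helper names `soft_threshold` and `lasso_cd` are hypothetical. Each fit starts from the previous solution, which is cheap because solutions at neighboring alphas are close.

```python
import numpy as np
from numpy.linalg import norm


def soft_threshold(x, tau):
    """Proximal operator of tau * |.| for a scalar x."""
    return np.sign(x) * max(abs(x) - tau, 0.0)


def lasso_cd(X, y, alpha, w_init, n_iter=500, tol=1e-10):
    """Cyclic coordinate descent for (1/(2n))||y - Xw||^2 + alpha * ||w||_1.

    Hypothetical helper for illustration, not skglm's solver.
    """
    n_samples, n_features = X.shape
    w = w_init.copy()
    residual = y - X @ w
    # Coordinate-wise Lipschitz constants of the datafit gradient
    lipschitz = (X ** 2).sum(axis=0) / n_samples
    for _ in range(n_iter):
        max_update = 0.0
        for j in range(n_features):
            if lipschitz[j] == 0.0:
                continue
            old = w[j]
            grad_j = -X[:, j] @ residual / n_samples
            # Exact minimization along coordinate j: gradient step + soft-thresholding
            w[j] = soft_threshold(old - grad_j / lipschitz[j], alpha / lipschitz[j])
            if w[j] != old:
                residual -= X[:, j] * (w[j] - old)
                max_update = max(max_update, abs(w[j] - old))
        if max_update < tol:
            break
    return w


# Hypothetical synthetic data: 5 informative features out of 30, plus noise
rng = np.random.default_rng(0)
n_samples, n_features = 80, 30
X = rng.standard_normal((n_samples, n_features))
w_true = np.zeros(n_features)
w_true[:5] = 1.0
y = X @ w_true + 0.5 * rng.standard_normal(n_samples)

alpha_max = norm(X.T @ y, ord=np.inf) / n_samples
alphas = alpha_max * np.geomspace(1, 1e-2, num=20)

w = np.zeros(n_features)
coefs, mse_train = [], []
for alpha in alphas:
    # Warm start: initialize from the solution at the previous (larger) alpha
    w = lasso_cd(X, y, alpha, w_init=w)
    coefs.append(w)
    mse_train.append(np.mean((y - X @ w) ** 2))
```

For exact Lasso solutions, the training MSE can only decrease as alpha decreases. This accounts for the monotone train curve. The test curve, by contrast, has no such guarantee.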

Finally, we can plot the train and test MSE.

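Notice the “sweet spot” of the test MSE at around 1e-4, which sits at the boundary between underfitting (large alpha: both train and test errors are high) and overfitting (small alpha: the train error keeps decreasing while the test error rises).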
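Reading the sweet spot off the curves amounts to taking the alpha with the smallest test MSE. Here is a minimal sketch, assuming `alphas`, `mse_train` and `mse_test` are the sequences built in the loop. The helper name `sweet_spot` is hypothetical, and the toy numbers are placeholders, not rcv1.binary results.

```python
import numpy as np


def sweet_spot(alphas, mse_train, mse_test):
    """Return the alpha with the lowest test MSE and the train/test gap.

    The gap mse_test - mse_train typically grows as alpha decreases
    (overfitting), while at large alpha both errors are high (underfitting).
    """
    alphas = np.asarray(alphas)
    mse_train = np.asarray(mse_train)
    mse_test = np.asarray(mse_test)
    best_idx = int(np.argmin(mse_test))
    return alphas[best_idx], mse_test - mse_train


# Toy numbers for illustration only (not rcv1.binary results)
toy_alphas = [1.0, 0.1, 0.01, 0.001]
toy_train = [0.90, 0.50, 0.20, 0.05]
toy_test = [0.95, 0.60, 0.40, 0.70]
best_alpha, gap = sweet_spot(toy_alphas, toy_train, toy_test)
print(best_alpha, gap)
```

With the lists from the example, `sweet_spot(alphas, mse_train, mse_test)` returns the alpha at the bottom of the test curve.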

```python
plt.close('all')
# Log-scale x axis, since alphas are spaced geometrically
plt.semilogx(alphas, mse_train, label='train MSE')
plt.semilogx(alphas, mse_test, label='test MSE')
plt.legend()
plt.title("Mean squared error")
plt.xlabel(r"Lasso regularization strength $\lambda$")
plt.show(block=False)
```
